RapidPlan: How Model Quality Can Be Improved

RapidPlan is a smart new way to make the treatment planning process even more efficient (although with a fully integrated system like Varian's, there is not much room for improvement left!).

Since the introduction in April 2016, we have reoptimized several hundred VMAT plans, and have developed four RapidPlan models:

- A prostate model (First published: April 2016/PS)

- A head&neck model (First published: June 2016/HK)

- A model for palliative treatments of the spine (First published: July 2016/HK)

- A prostate model for 10FFF single arc treatments (First published: August 2016/HK)

These four models are currently in clinical use.

A good RapidPlan model will give, among other things, accurate dose estimations. To do so, the algorithm needs a coherent set of input data for model training. Such a data set can be distilled from a set of training plans that have been optimized in a consistent way.

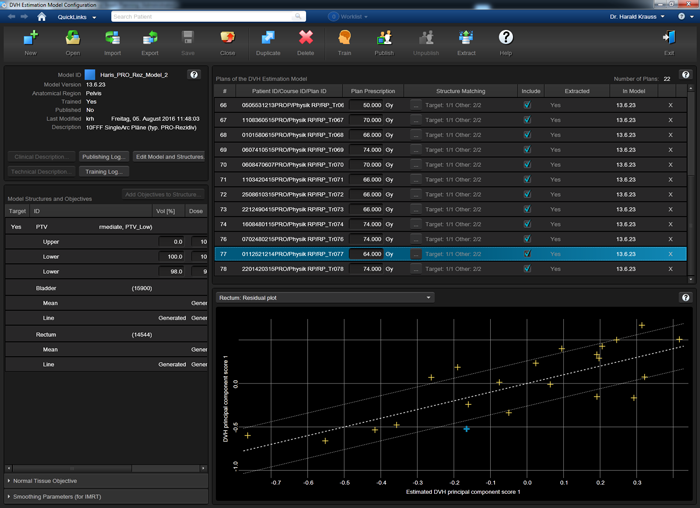

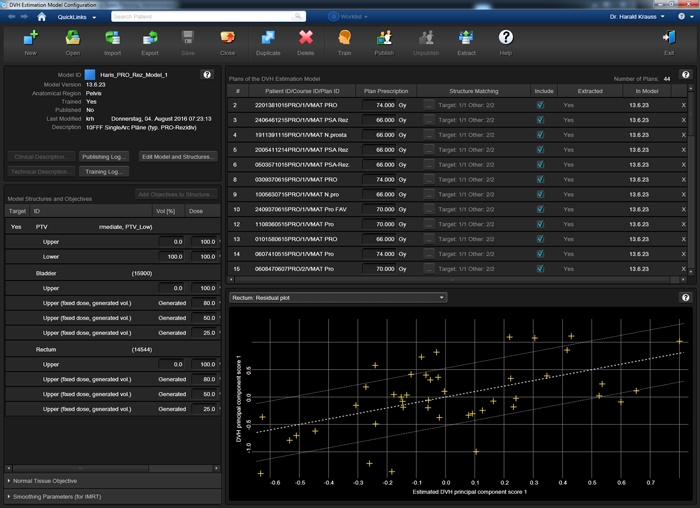

The following image shows the Residual Plot for the structure "Rectum" in our PRO_rez_model. The model is still at a very early stage: the training set holds only 22 plans, two more than the bare minimum of 20:

However, the resulting coefficient of determination R^2 is 0.595, which is quite high for such a small set [1], because the 22 plans were optimized in a consistent way.
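For readers who want to see what R^2 measures, here is a minimal sketch of the general textbook definition, R^2 = 1 - SS_res / SS_tot. It is not a description of how RapidPlan computes the value internally. The function name `r_squared` and the sample numbers are made up for illustration.

```python
def r_squared(observed, predicted):
    """Textbook coefficient of determination: 1 - SS_res / SS_tot."""
    if len(observed) != len(predicted) or len(observed) < 2:
        raise ValueError("need two equally long sequences with at least 2 points")
    mean_obs = sum(observed) / len(observed)
    # Residual sum of squares: how far the predictions are from the data
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    # Total sum of squares: how far the data are from their own mean
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("observed values are all identical; R^2 is undefined")
    return 1.0 - ss_res / ss_tot


# Hypothetical values, not taken from any RapidPlan model
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
print(round(r_squared(observed, predicted), 3))  # prints 0.98
```

A value of 1 means the predictions reproduce the data exactly. A value near 0 means they do no better than always predicting the mean.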

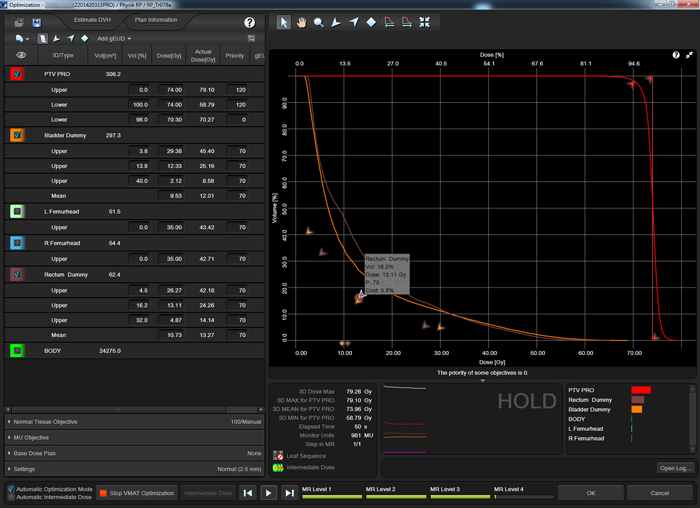

Three of our models used the following optimization strategy: in all plans of the training set, the DVHs of the OARs (e.g., "Rectum Dummy", the part of the rectum that does not overlap with the PTV plus an additional margin of 3 mm) were forced "down" by using several upper objectives, typically three to four. The objectives are placed such that each contributes the same cost to the cost function (5.8% in the screenshot):

This means that during all four levels of the PhotonOptimizer (PO), the upper objectives have to be adjusted regularly with the mouse, so that the cost of each objective always stays constant. This is true interactive optimization!

The result is a sort of "constant line force" which acts on the OAR DVH uniformly at all dose levels during optimization. Dose is "squeezed out" of the structure in a very efficient and reproducible way. On line segments of the OAR DVH where PTV objectives prevent further OAR dose reduction, the "counter force" by the PTV increases OAR cost, and OAR objectives need to be relaxed a little. On segments where no counter force is present, OAR cost is low, and the objectives can be tightened until the cost is the same in all segments of the OAR DVH.
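The relax/tighten rule described above can be written down as a small decision helper. This is only a sketch of the reasoning: the function name `suggest_adjustments`, the tolerance band, and the example cost values are assumptions for illustration. Nothing here talks to Eclipse or the PhotonOptimizer, and in practice the adjustment is done by hand with the mouse.

```python
def suggest_adjustments(costs_percent, target_percent, tolerance=0.5):
    """For each upper objective, say whether to relax, tighten, or keep it.

    costs_percent  -- current cost contribution of each objective (in %)
    target_percent -- the common cost every objective should contribute
    tolerance      -- hypothetical dead band (in %) to avoid constant fiddling
    """
    suggestions = []
    for cost in costs_percent:
        if cost > target_percent + tolerance:
            # Cost too high: the PTV is "pushing back" here, so relax
            suggestions.append("relax")
        elif cost < target_percent - tolerance:
            # Cost too low: no counter force, so the objective can be tightened
            suggestions.append("tighten")
        else:
            suggestions.append("keep")
    return suggestions


# Four hypothetical upper objectives on one OAR, target cost 5.8%
print(suggest_adjustments([9.1, 5.8, 2.0, 6.1], target_percent=5.8))
# prints ['relax', 'keep', 'tighten', 'keep']
```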

While the upper objectives of the OARs are moved around several dozen times during an optimization, one eye should always be kept on PTV coverage [2]. If it starts to break down, OAR objectives have to be relaxed. RapidPlan cannot know what PTV coverage is satisfactory. This is up to the user [3].

The absolute cost value which is selected depends on PTV coverage during optimization. If the PO struggles to reach the PTV goal, a smaller target cost value (like 3 to 4%) for the OARs is appropriate. The reason for the struggle could be an unfavorable geometrical relation between the structures in a patient, which cannot be changed. If, on the other hand, there is a large separation between PTV and OARs, the PTV goals will be easily met, and a larger OAR cost target (like 6 to 10%) can be selected.

The following animation illustrates the training plan optimization process. Every few seconds, a screenshot was taken. Intermediate dose calculation is performed in MultiResolution Level 4, to improve accuracy of dose calculation. In a normal optimization, Eclipse would leave the PhotonOptimizer after Level 4 and proceed to final 3D dose calculation. Instead of doing this here, we go on "hold" to keep the process in Level 4 (we have to make sure that the PO does not close). We do some final tuning of the objectives, then release the brake and switch back step by step to Level 1. This time, we let the PO run through all four levels without disturbing it. Performing the "wave" once [4] will improve PTV coverage:

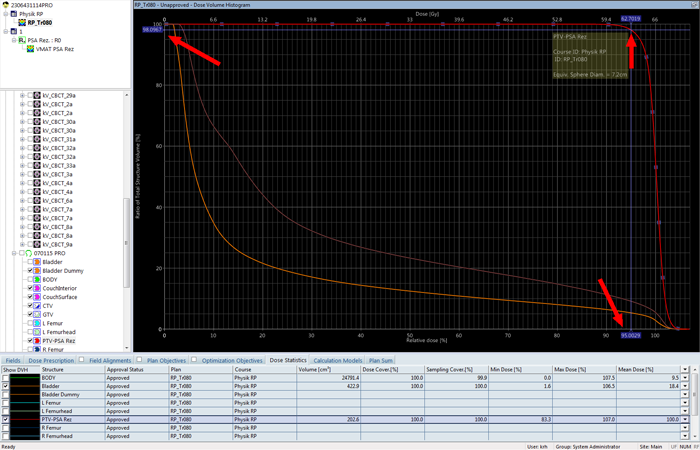

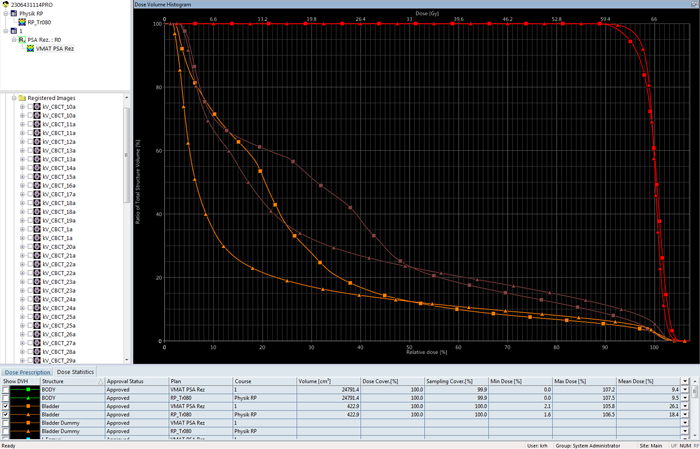

After the final 3D dose calculation, the DVH of the PTV is checked. More than 98% of the PTV volume should receive at least 95% (62.7 Gy) of the target dose (66 Gy):
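As a worked example of this check, 95% of 66 Gy is 0.95 × 66 = 62.7 Gy. The sketch below computes the fraction of PTV voxels at or above that threshold. It assumes equal-volume voxels and uses made-up dose values. The function name `coverage_fraction` is hypothetical and not part of Eclipse.

```python
def coverage_fraction(voxel_doses_gy, threshold_gy):
    """Fraction of voxels receiving at least threshold_gy.

    Assumes all voxels have the same volume, so the voxel fraction
    equals the volume fraction.
    """
    if not voxel_doses_gy:
        raise ValueError("no voxel doses given")
    covered = sum(1 for dose in voxel_doses_gy if dose >= threshold_gy)
    return covered / len(voxel_doses_gy)


PRESCRIBED_GY = 66.0
threshold_gy = 0.95 * PRESCRIBED_GY  # about 62.7 Gy

# Hypothetical PTV: 99 voxels at 65 Gy and one cold voxel at 60 Gy
ptv_doses = [65.0] * 99 + [60.0]
coverage = coverage_fraction(ptv_doses, threshold_gy)
print(f"coverage = {coverage:.1%}, goal met: {coverage > 0.98}")
```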

The DVH comparison of the RapidPlan training plan (triangles) with the clinical plan that actually got treated (squares) shows the improvement in terms of both PTV coverage and OAR sparing over the clinical plan:

This gives confidence that the training plan will be a good plan for the model training set. If the described procedure is repeated for all plans, the training set will contain very consistent data.

Here is a second example of the PO optimization, which also shows the momentary dose distribution:

For comparison, the next image shows a model which was trained on a less coherent set of plans. In this model, clinical plans were used which had been optimized by different people using different strategies. The training set contains 44 plans (twice as many as the set above), and the coefficient of determination R^2 is only 0.311 for the Rectum:

For the Bladder, R^2 is 0.773 in the "coherent" model after 22 plans, and 0.597 in the "less coherent" model after 44 plans. See the summary of training results for the coherent and less coherent model at this stage.

A word of caution: statistical coefficients, such as R^2 or Chi^2 (not mentioned yet), are not the only indicators of a model's quality. It is not that simple. While it is generally true that values closer to 1 are better, the "hunt for better statistics" (by removing structures or plans from the training set which decrease R^2 or increase Chi^2) can lead to a model which "over-fits the data". This can cause poor estimation capacity of the final model when it is applied to a new case.

Adding more plans to a model will not necessarily increase R^2. When a new plan is added, a new data set is introduced which holds the geometrical and dosimetric information of this plan. If, for instance, a plan is added which has a large overlap region between PTV and bladder, much larger than the previous average, it is likely that by adding this plan and retraining the model, R^2 will decrease. But adding the plan to the training set also adds valuable information about a new clinical situation. It is likely that ModelAnalytics will add a note on this plan, and it should be checked. If nothing is wrong with the plan, the contours are OK, and the optimization strategy was correct, the plan should be kept in the model.

RapidPlan offers a large number of tools which help identify outliers of various kinds (geometric outliers, dosimetric outliers, etc.) and influential data points which could have an adverse effect on the model, and which therefore should be excluded from the model.

Overlapping and Non-Overlapping OAR Structures

All our training plans were optimized in such a way that dose to the non-overlapping part of the OARs is as low as possible. We call the non-overlapping part of an OAR the "dummy", e.g. Dummy Bladder, Dummy Rectum, etc. The part of the OAR which overlaps with the PTV is treated as belonging to the PTV alone, and will therefore receive full PTV dose. No objectives are set for the full OAR, only for the dummy part.

In Contouring, the dummy is generated by cropping away the part of the organ which extends into any PTV, with an additional margin of 3 mm. This means that there will always be a separation of 3 mm between the surfaces of the dummy structure and any PTV structure.
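The cropping step can be illustrated with a toy voxel model. This is a sketch under stated assumptions and not how Eclipse Contouring is implemented: structures are represented as sets of integer voxel indices, distances are measured between voxel centers, and the function name `make_dummy` is hypothetical.

```python
import math


def make_dummy(oar_voxels, ptv_voxels, spacing_mm, margin_mm=3.0):
    """Return the OAR voxels lying more than margin_mm from every PTV voxel.

    oar_voxels, ptv_voxels -- sets of (x, y, z) integer voxel indices
    spacing_mm             -- voxel size along x, y, z in mm
    """
    def distance_mm(a, b):
        # Euclidean distance between two voxel centers in mm
        return math.sqrt(sum(((ai - bi) * s) ** 2
                             for ai, bi, s in zip(a, b, spacing_mm)))

    # Brute force is fine for a toy example; real structures need a faster method
    return {v for v in oar_voxels
            if all(distance_mm(v, p) > margin_mm for p in ptv_voxels)}


# Toy 1D example with 1 mm voxels: the OAR spans x = 0..9, the PTV x = 8..12
oar = {(x, 0, 0) for x in range(10)}
ptv = {(x, 0, 0) for x in range(8, 13)}
dummy = make_dummy(oar, ptv, spacing_mm=(1.0, 1.0, 1.0))
print(sorted(v[0] for v in dummy))  # prints [0, 1, 2, 3, 4]
```

In this toy case, the overlap (x = 8, 9) and the 3 mm margin next to it (x = 5, 6, 7) are removed, so only the part of the OAR beyond the margin remains.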

RapidPlan does not need dummy structures. In principle, there is no need to contour them at all. RapidPlan is designed to partition each OAR automatically into sub-volumes (in-field, out-of-field, leaf transmission and target overlap) during the extraction phase of model training.

The question now is: for which structures should a model be trained, and for which should it generate estimations and objectives? The above screenshots show a mixed situation: while the plans in the training set were optimized on the dummy structures, the model generated estimations and line objectives for the complete OAR structures. While this is perfectly OK, we found during model validation that it is better to train the model on the dummies (just as they were used in the training set), and to let the model generate estimations and line objectives for the dummies alone.

Although RapidPlan should "know" about the target overlap regions, the results are different: models which focused on the dummies alone gave consistently better results (better PTV coverage) than models which used the complete OARs and let RapidPlan take care of the overlap regions.

Objectives Generated by the Model

The OAR objectives which are automatically generated by the trained model when it is applied to a new plan can be either line objectives or, similar to the objectives which were used in the training plans, a set of three to four upper objectives. We are currently experimenting with both versions.

We prefer to use fixed priorities with the generated objectives. The values are chosen similar to the training priorities (120 for the PTV [5] and 70 for the OARs). By tweaking these values, final plan quality can be optimized.

Notes

[1] This does not mean that R^2 will automatically increase when more plans are added to the model!

[2] We place a dummy lower objective at 98% volume, 95% of target dose with zero priority to guide the eye.

[3] However, if PTV dose is not homogeneous in a certain plan, ModelAnalytics will "complain" that dose homogeneity within the PTV seems lower than average. This can be an indication that the training plan has too much emphasis on saving dose to the OARs. In the report generated by ModelAnalytics for the consistent model after 22 plans no such complaint can be found.

[4] Excessive use of the "wave" increases the probability of hot spots, even for VMAT.

[5] It is worth noting that even in the most demanding head&neck model, it is generally not necessary to increase priorities above 150 to achieve good PTV coverage.